API Testing Tips from a Postman Professional

As an engineer at Postman, I talk to a lot of people who use Postman to test their APIs. Over the years, I’ve picked up 10 tips and tricks for simplifying and automating the task of API testing.

TIP #1: write tests

The first step to API testing is to actually do it. Without good tests, it’s impossible to have full confidence in your API’s behavior, consistency, or backward compatibility. As your codebase grows and changes over time, tests will save you time and frustration by spotting breaking changes.

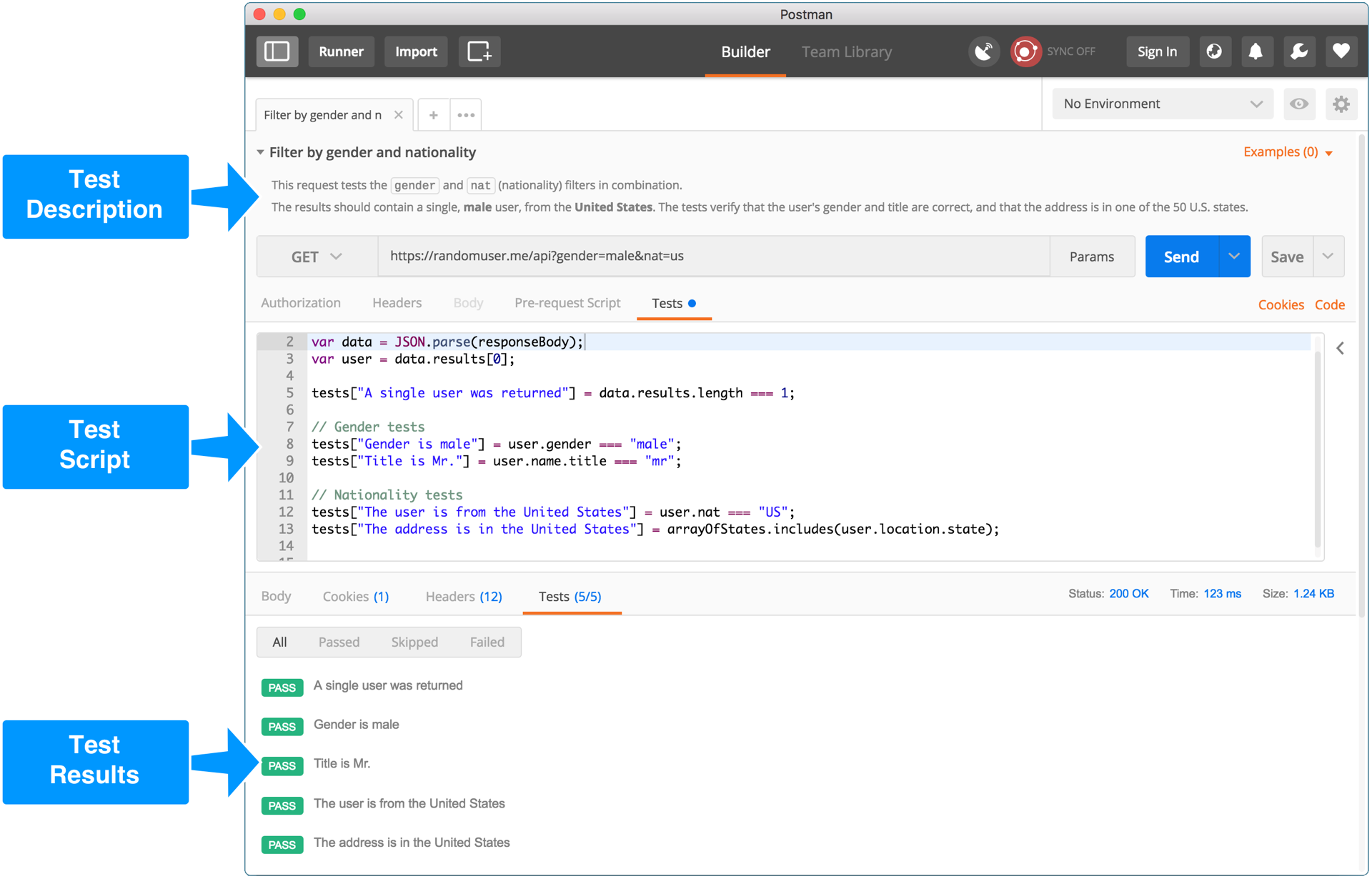

Tests in Postman are easy to write and use JavaScript syntax. Simple checks, such as HTTP status codes, response times, and headers, can each be done in a single line of code, like this:

https://gist.github.com/JamesMessinger/84963608c522637bc46309e1c24d82f8
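As a rough sketch of what such one-line checks can look like, the snippet below uses Postman’s legacy tests[...] syntax (the same style that appears in the reader comments on this post). The runBasicChecks wrapper and the sample values are hypothetical stand-ins so the checks can run outside Postman, and the 500 ms limit is an arbitrary assumption. Inside a Postman test script, you would write only the three tests[...] lines, because the sandbox provides tests, responseCode, responseTime, and postman.getResponseHeader() itself.

```javascript
// Hypothetical wrapper so the checks can run outside Postman.
// In a Postman test script, "tests", "responseCode", "responseTime", and
// "postman" come from the sandbox, and only the three check lines are needed.
function runBasicChecks(responseCode, responseTime, postman) {
  const tests = {};

  // Each check is a single line: a label and a boolean result
  tests["Status code is 200"] = responseCode.code === 200;
  tests["Response time is under 500ms"] = responseTime < 500; // assumed limit
  tests["Content-Type header is present"] = Boolean(postman.getResponseHeader("Content-Type"));

  return tests;
}

// Sample values standing in for a real response
const sampleHeaders = { "Content-Type": "application/json" };
const basicResults = runBasicChecks(
  { code: 200 },
  120,
  { getResponseHeader: (name) => sampleHeaders[name] }
);
console.log(basicResults);
```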

But you can also write more advanced JavaScript logic to validate responses, check custom business rules, persist data to variables, or even dynamically control Postman’s workflow.

TIP #2: don’t mix tests and documentation

Many people use Postman Collections to document their APIs, either as a collection of example requests that can be easily shared among team members, or as public API documentation for customers. For both of those use cases, it makes sense for your collection to contain detailed explanations for each of your API endpoints, walkthroughs of common API workflows, authentication requirements, lists of possible error responses, etc.

Your API tests, on the other hand, serve an entirely separate purpose.

First of all, the audience is different. Whereas API documentation is for the consumers of an API, tests are for the authors of an API.

Secondly, the content is different. A solid test suite will include many edge cases and intentionally bad inputs (to test error handling), and it may reveal sensitive information. All of this would be irrelevant or confusing for your API’s consumers.

And finally, the authors are possibly different. Documentation (especially public docs) may be written by your marketing team or technical writers, whereas tests are written by the developers who built the API or the testers who are responsible for validating the API.

For all of these reasons, I highly recommend that you keep your API tests in a separate collection from your API documentation. Yes, that means that you have to manage two different collections, but in my experience, the contents of these collections end up being so vastly different that there’s almost no overlap or duplication between them. And, as you’ll see later in this article, having your tests separated in their own collection opens up some powerful automation possibilities.

> Bonus Tip: The description fields that you’d normally use for your API documentation can be repurposed as test descriptions. It’s a great way to document your tests, so developers and testers know what’s being tested, what the expected output is, etc.

TIP #3: organize tests into folders

As your API grows in complexity, it will become important to organize your tests so they make sense and can be found easily. I suggest that you use folders to group requests by resource, test suite, and workflow.

Resources

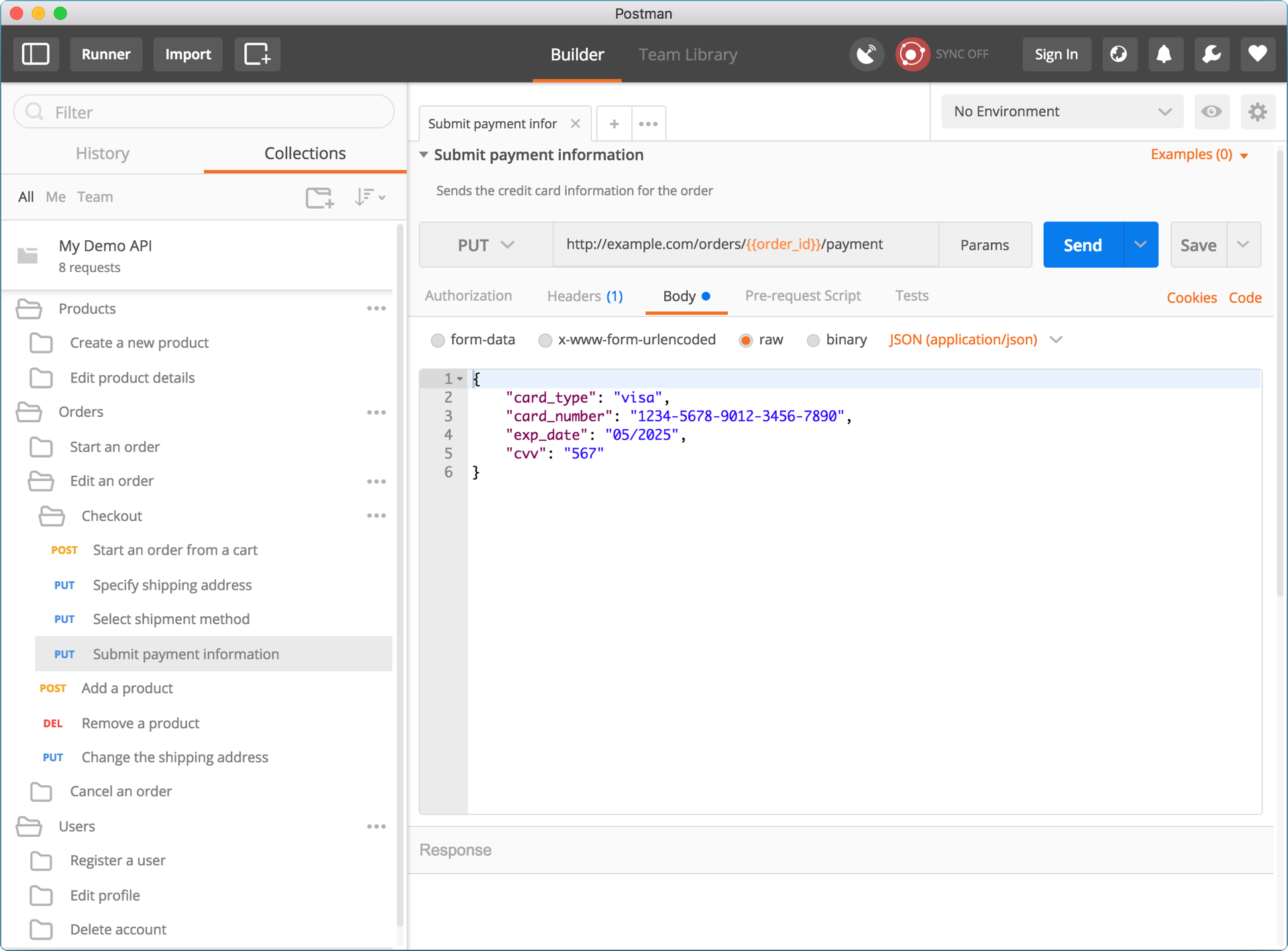

Create a top-level folder for each of your API’s resources (users, orders, products, etc.).

Test Suites

The second-level folders would be test suites for each of those resources, such as “Start a new order”, “Edit an existing order”, “Cancel an order”, etc.

For simple tests that only require a single API call, there’s no need for a third level of folders. You can simply create requests directly under the test suite folder. Be sure to give your requests meaningful names to differentiate them and describe what they’re testing. For example, the “Edit an existing order” test suite folder might contain requests such as “Add a product”, “Remove a product”, “Change the shipping address”, etc.

Workflows

The third-level folders are for more complicated tests that span multiple API calls. For example, your API might have a checkout flow that involves separate calls to create an order from a shopping cart, enter the shipping address, select the shipment method, and submit the payment information. In this case, you’d create a “workflow” folder named something like “Checkout” that contains the four requests.

TIP #4: JSON Schema validation

Many modern APIs use some form of JSON Schema to define the structure of their requests and responses. Postman includes the tv4 library, which makes it easy to write tests to verify that your API responses comply with your JSON Schema definitions.

https://gist.github.com/JamesMessinger/46bb549a7ffadf1aaab0c3f353b0064d

Of course, you probably wouldn’t want to hard-code your JSON Schema in your test script, especially since you may need to use the same schema for many requests in your collection. Instead, you could store the schema as a JSON string in a Postman environment variable. Then you can simply use the variable in your test script, like this:

https://gist.github.com/JamesMessinger/fba37542221b2128603a480aa1100ad2
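One thing to watch for with this approach: if the environment variable is missing, JSON.parse() receives undefined and throws a confusing syntax error. The sketch below is one possible guard, not the author’s code. The loadSchema helper and the example schema are hypothetical, and the environment is passed in as a plain object so the snippet can run outside Postman. In Postman, you would pass the sandbox’s environment object and hand the parsed schema to tv4.validate().

```javascript
// Hypothetical helper: read a JSON Schema stored as a JSON string in an
// environment-style object, and fail with a clear message if it is unusable.
function loadSchema(environment, variableName) {
  const raw = environment[variableName];
  if (typeof raw !== "string" || raw.trim() === "") {
    throw new Error(`Environment variable "${variableName}" is not set`);
  }
  try {
    return JSON.parse(raw);
  } catch (err) {
    throw new Error(`Environment variable "${variableName}" is not valid JSON: ${err.message}`);
  }
}

// Stand-in for Postman's environment object, holding an assumed example schema
const sampleEnvironment = {
  customerSchema: JSON.stringify({
    type: "object",
    required: ["id", "name"],
    properties: { id: { type: "number" }, name: { type: "string" } }
  })
};

const customerSchema = loadSchema(sampleEnvironment, "customerSchema");
console.log(customerSchema.required);

// In a Postman test script (not runnable here), the schema would then be used as:
//   const customer = JSON.parse(responseBody);
//   tests["Customer matches schema"] = tv4.validate(customer, customerSchema);
```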

TIP #5: reuse code

In the previous tip, I showed you how to easily reuse the same JSON Schema for multiple requests in your collection by storing it in an environment variable. You can also reuse JavaScript code the same way by leveraging the eval() function.

Most APIs have some common rules that apply to most (or all) endpoints. Certain HTTP headers should always be set, or the response body should always be in a certain format, or the response time must always be within an acceptable limit. Rather than rewriting these tests for every request, you can write them once in the very first request of your collection and reuse them in every request after that.

First request in the collection:

https://gist.github.com/JamesMessinger/bef06d26de2145bbf0a5de2addd77eaf

Other requests in the collection:

https://gist.github.com/JamesMessinger/82efb4b41075f1eb5df392bd879b017d

There’s no limit to the amount of code that can be stored in a variable and reused this way. In fact, you can use this trick to reuse entire JavaScript libraries, including many third-party libraries from NPM, Bower, and GitHub.
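The store-then-eval pattern described in this tip can be sketched roughly as follows. This is an illustration, not the code from the gists: the commonTests function, the response shape, and the 500 ms limit are all assumptions, and a plain object stands in for Postman’s global variables so the snippet runs outside Postman.

```javascript
// Stand-in for Postman's global variables (a plain object here so the
// sketch runs outside Postman; in Postman, variable values are strings).
const globals = {};

// "First request": define the shared checks once...
function commonTests(response) {
  const results = {};
  results["Content-Type is JSON"] = /^application\/json/.test(response.headers["Content-Type"] || "");
  results["Response time is acceptable"] = response.time < 500; // assumed limit
  return results;
}

// ...and store their source code as a string. Wrapping it in parentheses makes
// it a function expression, so eval() returns the function itself.
globals.commonTests = "(" + commonTests.toString() + ")";

// "Other requests": rebuild the function from the stored string and call it
const reusedTests = eval(globals.commonTests);
const reuseResults = reusedTests({
  headers: { "Content-Type": "application/json; charset=utf-8" },
  time: 230
});
console.log(reuseResults);
```

The parentheses matter: evaluating a bare function declaration returns undefined, so calling the result fails with a “not a function” error. Because eval() runs whatever the variable contains, this pattern is only appropriate for code you wrote or trust.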

TIP #6: Postman BDD

Postman’s default test syntax is really straightforward and easy to learn, but many people prefer the syntax of popular JavaScript test libraries such as Mocha and Chai. I happen to be one of those people, which is why I created Postman BDD. Postman BDD allows you to write Postman tests using Mocha’s BDD syntax. It leverages the “reusable code” trick from the previous tip to load Chai and Chai-HTTP, which means you can write tests like this:

https://gist.github.com/JamesMessinger/ad0746045e29497e1b1e22f8fa9ae39b

In addition to the fluent syntax, Postman BDD has several other features and benefits that make Postman tests even easier and more powerful. Follow the installation instructions or check out the sample collection to get started.

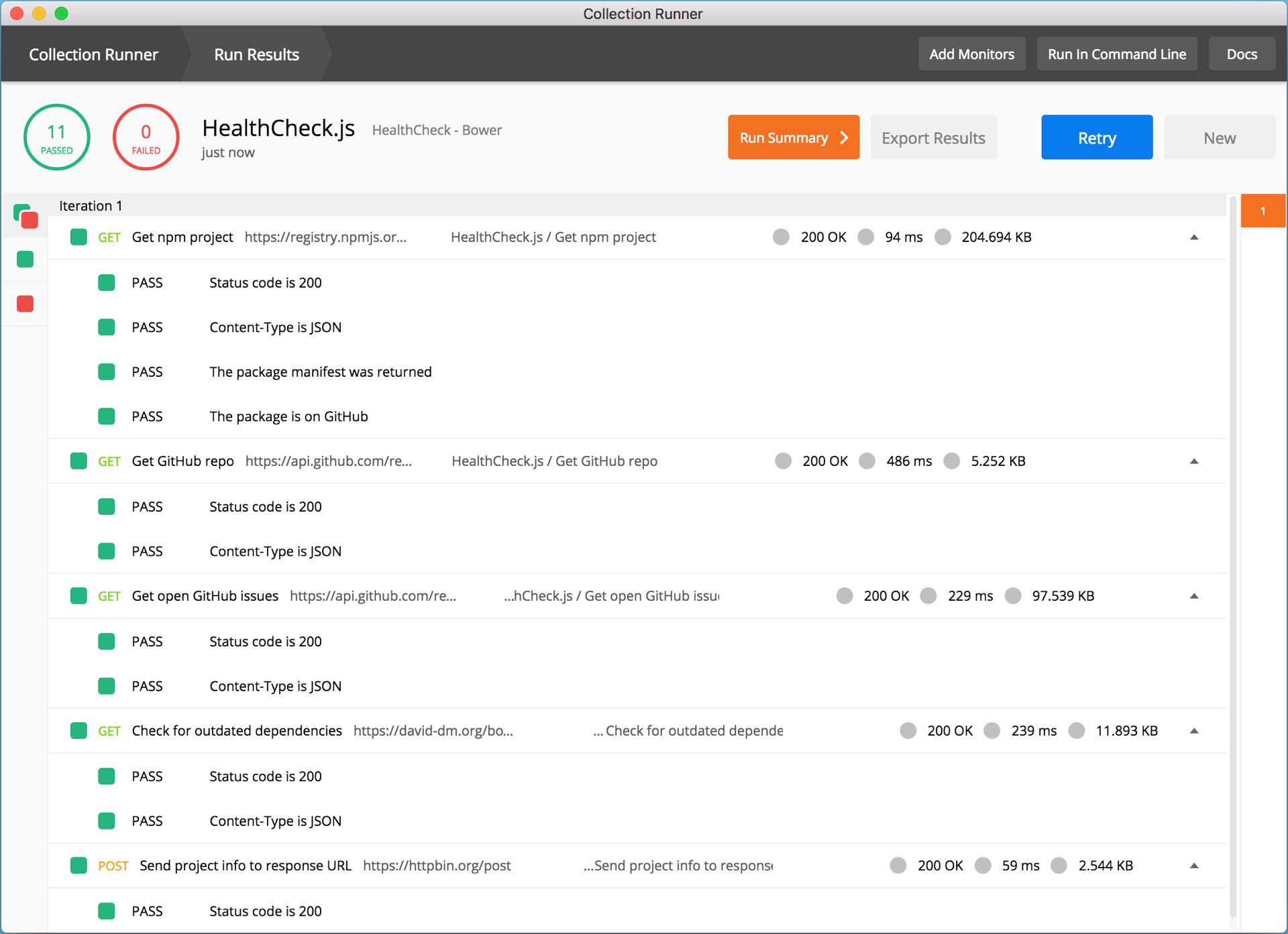

TIP #7: automate your tests with Postman’s collection runner

Up to now, we’ve focused on running a single request and testing the response. That approach works great while you’re writing your tests, but once they’re all written, you’ll want an easy way to run all of your requests quickly and see the results in a single view. That’s where Postman’s collection runner comes in.

TIP #8: automate your tests with Newman

The Postman collection runner is a great way to run all of your tests and see the results, but it still requires you to manually initiate the run. If you want to run your Postman tests as part of your Continuous Integration or Continuous Delivery pipeline, then you’ll need to use the Newman CLI.

Newman can easily be integrated into Jenkins, AppVeyor, Bamboo, Codeship, Travis CI, CircleCI, or any other code deployment pipeline tool. It supports a variety of output formats, including human-friendly console output and JSON or HTML files.

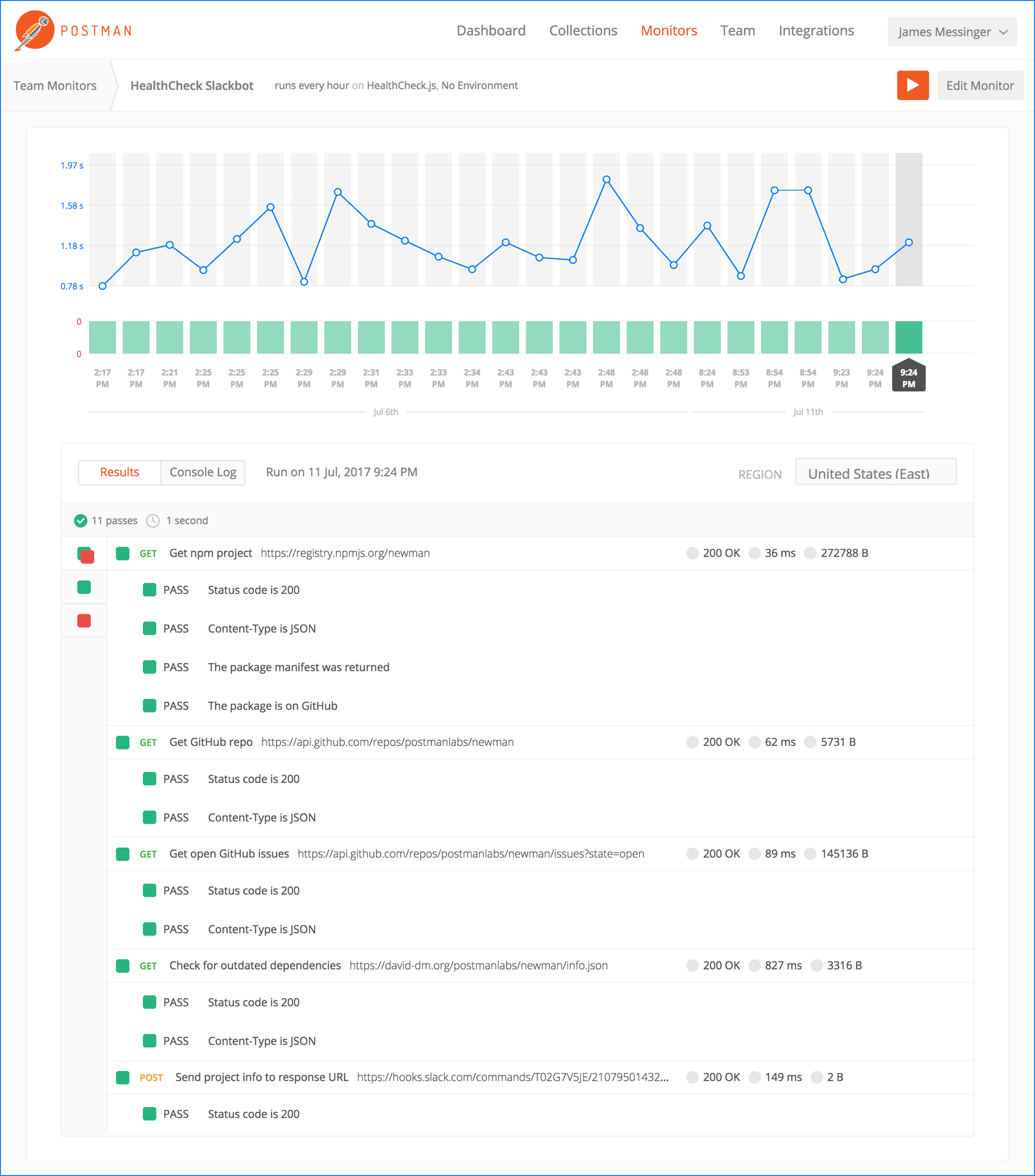

TIP #9: automate your tests with Postman Monitors

You can use Postman Monitors to automatically run your Postman tests at regular intervals, such as every night, or every 5 minutes. You’ll automatically be notified if any of your tests ever fail, and you can even integrate with a variety of third-party services, such as PagerDuty, Slack, Datadog, and more.

Postman Monitors shows your test results in the same familiar layout as the Postman collection runner, so it’s easy to compare them with the results you see in the Postman app.

TIP #10: dynamically control test workflows

By default, the Postman collection runner, Newman, and Postman Monitors will run each request in your collection in order. But you can use the postman.setNextRequest() function to change the order. This allows you to conditionally skip certain requests, repeat requests, terminate the collection early, etc.

https://gist.github.com/JamesMessinger/1e6702e0c96be032d905adb274727baa
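As a minimal sketch of the kind of branching this enables, the helper below decides which request should run next for a hypothetical order-polling workflow. The function name, the request names (“Check order status”, “Download receipt”), and the status values are all assumptions made for illustration. In Postman, its return value would be passed to postman.setNextRequest(), where a request name jumps to that request and null stops the run.

```javascript
// Hypothetical decision helper: returns the name of the request to run next,
// or null to stop the collection run.
function chooseNextRequest(statusCode, orderStatus, attempt, maxAttempts) {
  if (statusCode !== 200) {
    return null; // unexpected response: terminate the run early
  }
  if (orderStatus === "processing") {
    // Repeat the same request until the order is ready, up to a limit
    return attempt < maxAttempts ? "Check order status" : null;
  }
  return "Download receipt"; // order is ready: skip ahead to a later request
}

console.log(chooseNextRequest(200, "processing", 1, 5));
console.log(chooseNextRequest(200, "complete", 2, 5));
console.log(chooseNextRequest(500, "processing", 1, 5));

// In a Postman test script (not runnable here), with "data" parsed from the
// response body and "attempt" kept in a variable between requests:
//   postman.setNextRequest(chooseNextRequest(responseCode.code, data.status, attempt, 5));
```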

The setNextRequest() function is deceptively simple, but it enables all sorts of powerful use cases, from generating a Spotify playlist, to checking your website for broken links, or even deciding what to feed your dog.

Great tips! Regarding Tip #2: is there a plan to support forking, branching, and merging collections? Eventually, I would like to merge the released version of the collection (with docs) with the in-development version (with tests). It would be useful to have a config option that toggles publishing of the tests and pre-request scripts, so we can have internal docs and external docs that share the same collection but have different documentation views.

Please help me. When I run this code:

// Load the JSON Schema
const customerSchema = JSON.parse(environment.customerSchema);

I get this error: “There was an error during JSON.parse(): Unexpected token u in JSON at position 0.”

Thanks,

This error is probably happening because the “customerSchema” variable is undefined.

Make sure the “customerSchema” environment variable exists, and that it contains a valid JSON Schema.

I’ve been using Newman/Postman for a while now. If I could have one wish for a feature, it would be a better import/merge for ongoing projects where the API definition from Swagger can change or have a namespace refactored. You either have to re-import and manually paste your tests back in, or manually update your API calls and headers for the tests.

It’s the one feature I’d be tempted to contribute.

I suppose the other time sink regarding test maintenance is JSON Schema, which can also change. I find it a hassle getting these from OpenAPI/Swagger.

All good tips. I would add an environment variable for response times. Some SLAs (service level agreements) might have penalties for response times over (for example) 1500ms. Some environments are slower than others, and this is handy to add as a common test (if you use eval for this). It’s no substitute for actual performance testing, but during development it can help red-flag calls or, more usefully, environment problems, especially if you track trends using reports in Jenkins.

Help needed:

How can I run tests based on the result of an if condition?

E.g.:

var data = JSON.parse(responseBody);
if (responseCode.code === 404 && data.message === "Invalid Id") {
    tests["Test1: Invalid id passed"]
}

This one is not executing.

You need to set the test to true or false. Like this:

tests["Test1: Invalid id passed"] = true;

I read the bio 🙂 good luck with your traveling!

hehehe… I think you’re the only one who read it. Thanks for the good wishes

Thanks for this amazing post. It’s going to get us started with a test function on a new devops team in an internal-api-heavy environment.

Any other resources you might recommend?

I need to get the JSON data for a child test case using orderby via Postman. Help me!

Hi, very nice article. Keep up the good work. Thanks!

We’re looking into Postman for a lot of our API testing, and I’m curious if there are recommendations for how to handle API drift across environments?

That is, if my API is changing, but the build with the change is still in Staging, I want to be testing it there, but I also want to be monitoring the API in Production. What I’m looking for, I suppose, is a way to promote requests through different environments. I’m fairly certain there’s nothing built-in right now for this, but maybe there are recommended workarounds?

Great article. Wish you the best health for further awesome instruction like this.

Good job! Thanks mate!

I am trying to get an object from TM1 using the REST API, but when I post it to the target environment, the object definition changes. Does JSON change the parameters in the original object? The same copy is not reflected. Thanks!

Having created a monitor and run it a few times to get a baseline, I’d now like to edit the monitor and change the expected response time. When I select Edit, I only seem to be able to edit the timeout response time. Am I missing something?

I’m stuck on a logical issue and am looking for a conventional solution, if one already exists.

I built my BDD test cases with Postman, and the collection is pushed to the production branch in Git to be deployed to production.

When we trigger the deployment from Jenkins, we run all the tests with Newman. The test cases fail because the server has not yet been deployed with the new code that contains the API fixes, so the API tests keep failing.

So is it a better idea to run all the tests against the staging API during production deployment, so that the latest API changes can be verified? I don’t like this, but currently it looks like I don’t have a better solution.

I thought this was a great blog post, and it made me rethink how I build my Postman test cases. I especially love the idea of defining testing functions in one place and then running them using eval!

With that said, I had a couple of questions. When I put an eval in my test cases Postman gives me a warning: “eval can be harmful”. However I don’t seem to run into any issues running my tests either individually or via runner. Is there a way to suppress this warning and/or define the functions so that it doesn’t come up?

When I run my tests with Newman, they all run successfully, but I get the following at the end of the run: “Failure: TypeError, Detail: eval(…) is not a function”

Are there best practices for defining functions in Postman tests and getting them to run cleanly?

Reading it now, and it’s interesting. Great work.

Great post! Thanks!

I have a question regarding data files. The contents of the data files are for testing features provided by REST APIs. Do you have any general suggestions about where to store these data files? To me, it seems like they should be stored in the REST app. Any suggestions? Thanks.

I found that JavaScript’s hoisting behavior can be very useful in the collection’s pre-request script:

Nice write-up. One suggestion: when you refer to a different post, for example:

“In the previous tip, I showed you how to easily reuse the same JSON Schema for multiple requests in your collection”

adding a link to the post would be very helpful.

Nice tips. Thank you for sharing.

Is there another approach for tip #5 that doesn’t use eval()? The Mozilla documentation has a rather lengthy explanation of why using eval() is a security risk:

https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/eval#never_use_eval!

Hi Murdoc, Please contact our support team at http://www.postman.com/support and they’ll be able to help you.🙂

Great article. Thanks, James. How’s it going with your goal? I’m in Scandinavia. Let me know if you want to do your ‘git push’ from here 🙂